Harnessing AI ethically in nonprofits: From policy to practice

Paola Galvez Callirgos, Globethics Non-resident fellow for AI Ethics

26 May 2025

Artificial Intelligence (AI) offers remarkable potential, but it also raises important ethical challenges. As the technology evolves rapidly, nonprofit organisations are beginning to explore how AI tools can support their missions, from improving grant applications to enhancing decision-making.

In a recent webinar titled "Building Trustworthy AI Policies for Your Nonprofit" co-organised by PoliSync, Caux Foundation, and Globethics, I shared insights on how nonprofits can engage with AI in a way that is ethical, effective, and aligned with global principles. This blog builds on that presentation, offering an overview of AI use cases in the nonprofit sector, the ethical questions that arise, foundational ethical principles, and a practical roadmap to help your organisation develop a sound AI governance framework.

AI use cases in the nonprofit sector

Nonprofits have the opportunity to leverage AI across diverse areas to amplify their mission and drive greater impact. Below are key AI use cases organised into four categories, showcasing how this technology can support and empower nonprofit work.

Fundraising

AI can transform fundraising by enabling smarter donor segmentation and engagement. By analysing giving patterns and preferences, AI can help nonprofits tailor their outreach to donors and strengthen their grant applications. AI-powered tools can also streamline grant proposal writing. Communities such as Fundraising.ai, an American initiative, promote the development and use of responsible AI for nonprofit fundraising.

Community care

Personalised communication is key to strengthening relationships with supporters. AI provides an opportunity for nonprofits to customise messaging and boost engagement and donation rates. Integrating AI into Customer Relationship Management (CRM) systems can further enhance donor management by providing timely insights and engagement strategies.

Content creation

Creating compelling content is essential for outreach and advocacy. AI can assist nonprofits in generating website materials, helping maintain a consistent and impactful voice. Additionally, AI can support social media management by creating, scheduling, and optimising posts to maximise audience engagement.

Operations

Behind the scenes, AI can improve operational efficiency through advanced data analysis. It can help nonprofits evaluate programme effectiveness and predict donor giving propensity. AI tools can also automate meeting summarisation and note-taking, streamlining internal communication and collaboration.

Ethical dilemmas

The integration of AI into nonprofit operations presents a complex web of ethical considerations that organisations must navigate with considerable care.

Bias and discrimination

Perhaps the most pressing concern is bias and discrimination: AI systems can perpetuate and amplify existing social inequities. When these systems are trained on datasets that reflect historical prejudices or fail to adequately represent diverse populations, they risk causing genuine harm to the vulnerable communities that nonprofits seek to serve. This challenge is particularly acute because many AI tools are developed in contexts far removed from the realities faced by marginalised groups.

Privacy and data protection

Nonprofits often work with highly sensitive personal information, whether dealing with healthcare provision, supporting refugees, or operating in conflict zones. The collection and processing of such data for AI applications raises fundamental questions about beneficiaries' rights to privacy and consent, particularly when individuals may have limited understanding of how their information will be processed.

Digital divide

The digital divide presents yet another ethical dilemma, as AI tools may exclude those who lack reliable internet access or digital literacy. For example, if a nonprofit deploys an AI-powered chatbot on its website, the system could create new forms of exclusion rather than reducing inequalities as intended, potentially leaving behind the most vulnerable members of society.

Transparency and explainability

Many AI systems operate as opaque "black boxes," making it extraordinarily difficult to explain their decision-making processes to stakeholders, beneficiaries, or regulatory bodies. This lack of transparency can seriously undermine trust and accountability, fundamental pillars upon which nonprofit credibility rests. When beneficiaries cannot understand why certain decisions have been made about their care or support, the relationship between organisation and community can be severely damaged.

AI principles

Given the complex ethical landscape outlined above, organisations considering AI implementation must ground their approach in robust ethical principles. The UNESCO Recommendation on the Ethics of AI provides an essential starting point for any organisation embarking on AI adoption, offering a comprehensive framework that addresses the multifaceted challenges inherent in AI deployment.

At the foundation of ethical AI practice lie the following principles:

- Proportionality and Do No Harm

- Safety and Security

- Fairness and Non-Discrimination

- Privacy and Data Protection

- Human Oversight and Determination

- Transparency and Explainability

- Responsibility and Accountability

Practical steps for building your organisation's AI governance framework

Building a robust AI governance framework requires a systematic approach that prioritises human values whilst embracing technological opportunities. The following steps provide a roadmap for nonprofits seeking to implement AI ethically.

Cultivate leadership and understanding

The foundation of effective AI governance begins with organisational commitment and a transformative mindset from leadership. Leaders must actively engage with the technology through use cases that align with their organisation's mission. This hands-on approach builds genuine understanding and helps identify realistic applications that can drive meaningful impact.

Establish an AI governance council

An AI governance council, comprising representatives from different areas of expertise, ensures that AI decisions consider diverse perspectives. This multidisciplinary team serves as both a decision-making body and a knowledge-sharing forum, helping to democratise AI understanding across the organisation.

Take a human-centred approach

Actively involve your team in discussions about AI adoption, ensuring that staff understand how these technologies might impact their work and addressing their concerns transparently. The goal is not to replace human judgment, but to augment it.

Regular team consultations, training sessions, and feedback mechanisms help build trust and ensure that AI implementations align with organisational values and culture. When people feel included in the process, they become advocates for positive change.

Map before you leap

Before considering any technological intervention, organisations must thoroughly map their existing processes, understand their data landscape, and identify genuine areas for improvement. This diagnostic phase reveals whether issues stem from workflow inefficiencies, resource allocation problems, or gaps that technology might address.

Only after completing this assessment should organisations evaluate whether AI represents the most appropriate solution.

Develop comprehensive AI policies

Once the groundwork is established, organisations need a clear AI policy. At a minimum, it should cover data handling, algorithmic transparency, accountability mechanisms, and regular reviews for bias to prevent unintended outcomes, and it should assign responsibility for AI outcomes to specific roles within your organisation.

Mixed perceptions

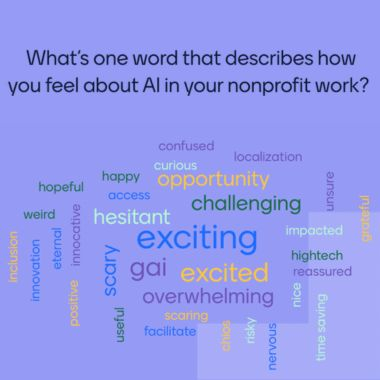

During the webinar, I asked more than 100 participants to share one word that describes how they feel about AI in their nonprofit work.

The responses, visualised in this word cloud, offer a powerful snapshot of the complex emotions AI evokes across the sector. Words like “exciting”, “opportunity”, and “innovative” stood out, but so did “overwhelming”, “hesitant”, and “challenging”.

This emotional mix captures both the promise and the uncertainty surrounding AI adoption in mission-driven environments. These insights align closely with the findings of the Ipsos AI Monitor 2024, which revealed that 53% of people are excited about AI-powered products and services, while 50% feel nervous about the technology.

And you, how do you feel about AI in your nonprofit work? Are you ready to leverage AI for good?